Biography

I obtained my Ph.D. degree from Shanghai Jiao Tong University in September 2020, under the supervision of Kai Yu and Yanmin Qian. During my Ph.D., my research focused on deep learning-based approaches to speaker recognition, speaker diarization, and voice activity detection. After graduating, I joined Tencent Games as a senior researcher, where I (informally) led a speech group and extended my research interests to speech synthesis, voice conversion, music generation, and audio retrieval. I am currently with the SpeechLab, led by Haizhou Li, at the Shenzhen Research Institute of Big Data, The Chinese University of Hong Kong, Shenzhen.

I am the creator of Wespeaker, a research- and product-oriented toolkit for speaker representation learning. Check out my tutorial to see what speaker modeling can do and how to easily apply Wespeaker to your own tasks. You are welcome to use it and contribute!

Services: I serve as a regular reviewer for speech and deep learning conferences and journals, including Interspeech, ICASSP, ICME, SPL, TASLP, Neural Networks, and Pattern Recognition. I will serve as the publication chair for SLT 2024.

Openings: We are actively seeking self-motivated students to join our team as research assistants, visiting students and potential Ph.D. students. Multiple positions are immediately available in Shenzhen, with competitive salary and benefits. If you are interested, please drop me an email with your CV.

Interests

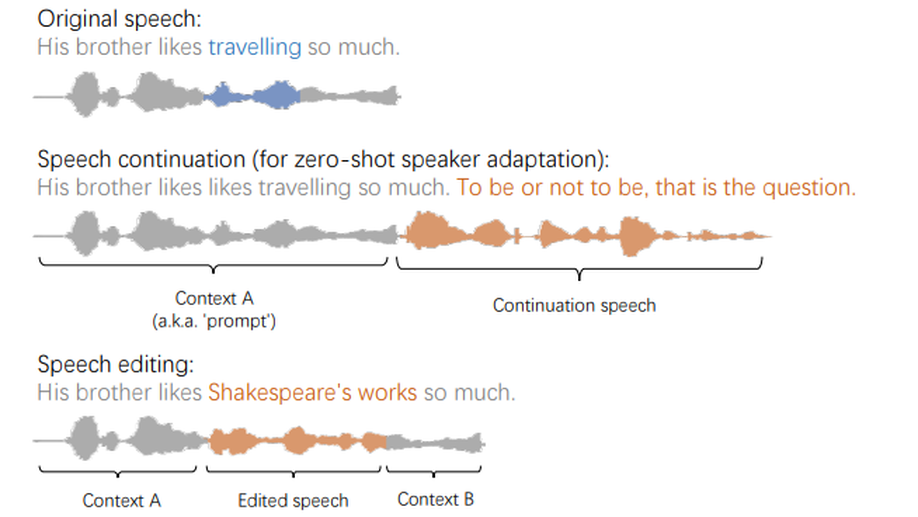

- Voice conversion

- Speech synthesis

- Speaker recognition

- Speaker diarization

- Target speech extraction

Education

- PhD in Computer Science and Technology, 2020, Shanghai Jiao Tong University

- BSc in Software Engineering, 2014, Northwestern Polytechnical University